Dassault Systemes BIOVIA Pipeline Pilot 2024.1

In the scientific community, there is a growing need for information technologies capable of manipulating and analyzing large quantities of data in real time. Every aspect of the R&D process is being industrialized, and the reliance on automated methods has dramatically altered the research environment. Research data is doubling in volume every 12 months, creating a flood of new data from both internal and external sources. This presents tremendous challenges in integrating, managing, and analyzing this valuable data, and as a result much of it goes largely unused.

Pipeline Pilot Client was designed on the premise that these challenges can be addressed by changing the way research data is handled and analyzed. This approach, known as "data pipelining", uses a data flow framework to describe the processing of data.

Data pipelining is the rapid, independent processing of data points through a branching network of computational steps. It has several advantages over conventional technologies, including:

- Flexibility: Each data point is processed independently, allowing processing to be tailored to each record.

- Speed: Highly optimized methods allow rapid, real-time analysis of thousands (or millions) of data points.

- Efficiency: Individual processing of data points limits memory use so that many protocols can be executed simultaneously.

- Ease of use: Protocols are easy to construct, with visualization that exposes key data processing steps.

- Integration: Data pipelining is a powerful tool for connecting the different data sources, databases, and applications required in a variety of scientific enterprises.
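
The idea of processing each data point independently can be illustrated with a minimal sketch in plain Python. This is not Pipeline Pilot code and does not use its API; the function names (`read_records`, `add_property`, `run_pipeline`), the `weight` field, and the threshold of 200 are all hypothetical. Generators stand in for pipes: each record is read, annotated, and routed to a branch before the next one is produced, so the full data set is never held in memory at once.

```python
from typing import Any, Callable, Dict, Iterable, Iterator, List, Tuple

Record = Dict[str, Any]


def read_records(n: int) -> Iterator[Record]:
    """Hypothetical source: yield records one at a time, never a full list."""
    for i in range(n):
        yield {"id": i, "weight": 100.0 + 50.0 * i}


def add_property(
    records: Iterable[Record], name: str, fn: Callable[[Record], Any]
) -> Iterator[Record]:
    """Compute a new property on each record as it flows past."""
    for rec in records:
        rec[name] = fn(rec)
        yield rec


def run_pipeline(n: int) -> Tuple[List[int], List[int]]:
    """Stream n records and route each one to a 'pass' or 'fail' branch."""
    passed: List[int] = []
    failed: List[int] = []
    stream = add_property(read_records(n), "heavy", lambda r: r["weight"] > 200.0)
    for rec in stream:
        # Each record is routed on its own values, independent of the others.
        (passed if rec["heavy"] else failed).append(rec["id"])
    return passed, failed


if __name__ == "__main__":
    print(run_pipeline(5))  # ([3, 4], [0, 1, 2])
```

Because only one record is in flight at a time, memory use stays flat as the input grows, which is the property the Efficiency point above describes.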

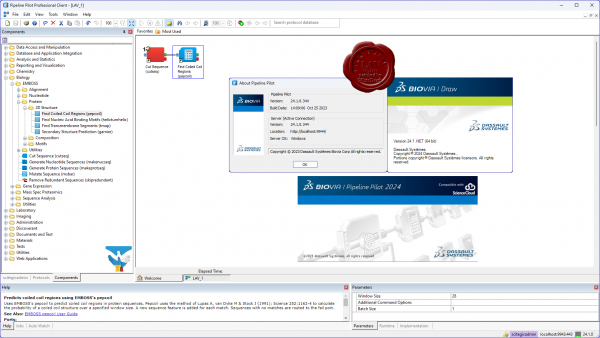

Pipeline Pilot provides environments to design, test, and deploy data processing procedures called protocols. A protocol comprises a set of components that perform operations such as data reading, calculation, merging, and filtering. The connections between components define the sequence in which data is processed. Data from files, databases, and the web is merged, compared, and processed according to the logic of the protocol.
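
As a rough analogy, a protocol can be thought of as an ordered chain of components, each taking a stream of records and returning a new stream. The Python sketch below models that idea under stated assumptions: it is not how Pipeline Pilot is implemented, and every name in it (`merge_by_key`, `calculate`, `keep_if`, `run_protocol`, and the sample compound and assay data) is invented for illustration.

```python
from typing import Any, Callable, Dict, Iterable, Iterator, List

Record = Dict[str, Any]
Component = Callable[[Iterable[Record]], Iterator[Record]]


def merge_by_key(lookup: Dict[Any, Record], key: str) -> Component:
    """Merging component: join each record with a second source on `key`."""
    def component(records: Iterable[Record]) -> Iterator[Record]:
        for rec in records:
            yield {**rec, **lookup.get(rec[key], {})}
    return component


def calculate(name: str, fn: Callable[[Record], Any]) -> Component:
    """Calculation component: add a derived property to each record."""
    def component(records: Iterable[Record]) -> Iterator[Record]:
        for rec in records:
            yield {**rec, name: fn(rec)}
    return component


def keep_if(predicate: Callable[[Record], bool]) -> Component:
    """Filtering component: pass on only records that satisfy `predicate`."""
    def component(records: Iterable[Record]) -> Iterator[Record]:
        return (rec for rec in records if predicate(rec))
    return component


def run_protocol(source: Iterable[Record], components: List[Component]) -> List[Record]:
    """Connect components in order; list order plays the role of the pipes."""
    stream: Iterable[Record] = source
    for component in components:
        stream = component(stream)
    return list(stream)


# Hypothetical sample data standing in for a file and a database table.
compounds = [
    {"id": "A", "mass_mg": 12.0},
    {"id": "B", "mass_mg": 3.0},
    {"id": "C", "mass_mg": 8.0},
]
assay = {"A": {"activity": 0.9}, "B": {"activity": 0.95}, "C": {"activity": 0.2}}

protocol = [
    merge_by_key(assay, "id"),
    calculate("score", lambda r: r["mass_mg"] * r["activity"]),
    keep_if(lambda r: r["score"] >= 2.0),
]
results = run_protocol(compounds, protocol)
```

Here `results` contains the merged records for compounds A and B; C is dropped by the filter. Reordering or swapping entries in `protocol` changes the processing sequence without touching the components themselves, which mirrors how connections, rather than the components, define the flow.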

A graphical drag-and-drop interface makes protocols easy to construct. The work environment is divided into windows. On the left, the Explorer window shows the contents of the database of available components and prebuilt protocols. On the right, the workspace provides a way to create new protocols by dropping and connecting components.

A visual representation makes it simple to understand critical data processing steps in a potentially complicated procedure. Components are displayed as function-specific icons clearly identified with descriptive labels. Data records are passed between components through pipes represented by gray lines.

You can read data from databases or files, calculate new properties, filter records, and view results. You can save new protocols in the database of components and publish them for enterprise-wide sharing and reuse.

The best practices of your domain experts are automatically captured as they use Pipeline Pilot in their daily work.